AIs can do interesting things with organizational data, but the current security hygiene around AI integrations is… suboptimal.

This essay is a proposal for a security regime for AI that should be acceptable for any scenario in which sharing your data with a third-party AI service company is reasonable.

This article refers heavily to the Model Context Protocol (MCP). Click here for an overview of the MCP.

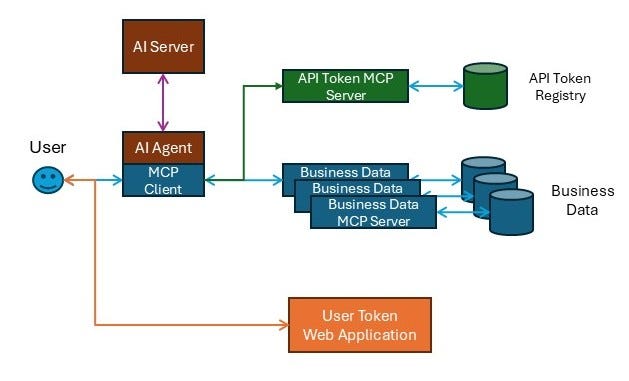

Key Components

A web application that trusted individuals can authenticate against to acquire an expiring JWT

An AI agent that has access to one or more business-data-related MCPs

An MCP that provides API tokens for various applications, given a valid JWT

One or more MCPs that provide access to various business data sources, given a valid JWT and a valid API token

Lifecycle

The user logs into the User Token Generator web application, satisfying the appropriate authentication requirements (password, 2FA, IP address, etc.)

The user is given a JWT which will eventually expire

The user provides that JWT to the AI Server through the AI Agent chat interface

The user then asks the AI Agent to perform data analysis of some kind

The AI Agent will use the MCP Client to reach out to the API Token MCP, providing the JWT

The API Token MCP will:

Validate the JWT

Verify the user’s rights to access the data source

Provide the API token back to the AI Server

Once the AI Agent has the API token, it will use the MCP Client to pass both the user’s JWT and the API token to the appropriate business-data MCP Server

The MCP Server will:

Validate the JWT (again)

Use the API Token to access the business data

Return the business data to the MCP Client, which passes it to the AI Server for further work
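The lifecycle above can be sketched in Python using only the standard library. This is a minimal sketch under stated assumptions, not a definitive implementation: the signing key, the API_TOKENS and USER_RIGHTS tables, the returned rows, and every function name are hypothetical, and HS256 is assumed as the signing algorithm. A real deployment would more likely use a maintained JWT library and a proper secrets store.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical values for illustration only.
SIGNING_KEY = b"demo-signing-key-change-me"
API_TOKENS = {"sales-db": "sales-db-token-123"}  # data source -> API token
USER_RIGHTS = {"alice": {"sales-db"}}            # user -> permitted data sources


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(signing_input: str) -> str:
    digest = hmac.new(SIGNING_KEY, signing_input.encode(), hashlib.sha256).digest()
    return _b64url(digest)


def issue_jwt(user: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """User Token Generator: issue an expiring HS256 JWT after a successful login."""
    now = time.time() if now is None else now
    header = _b64url(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url(json.dumps({"sub": user, "exp": int(now) + ttl_seconds}).encode())
    return f"{header}.{payload}.{_sign(f'{header}.{payload}')}"


def validate_jwt(token: str, now: Optional[float] = None) -> dict:
    """Check the signature and expiry; return the claims or raise PermissionError."""
    now = time.time() if now is None else now
    parts = token.split(".")
    if len(parts) != 3:
        raise PermissionError("malformed JWT")
    header, payload, signature = parts
    expected = _sign(f"{header}.{payload}")
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        raise PermissionError("bad JWT signature")
    claims = json.loads(_b64url_decode(payload))
    if claims["exp"] <= now:
        raise PermissionError("JWT expired")
    return claims


def api_token_mcp(jwt_token: str, data_source: str, now: Optional[float] = None) -> str:
    """API Token MCP: validate the JWT, verify the user's rights, return the token."""
    claims = validate_jwt(jwt_token, now)
    if data_source not in USER_RIGHTS.get(claims["sub"], set()):
        raise PermissionError("user may not access this data source")
    return API_TOKENS[data_source]


def business_data_mcp(jwt_token: str, api_token: str, data_source: str,
                      now: Optional[float] = None) -> dict:
    """Business-data MCP: validate the JWT again, then use the API token."""
    validate_jwt(jwt_token, now)
    known = API_TOKENS.get(data_source, "")
    if not known or not hmac.compare_digest(api_token.encode(), known.encode()):
        raise PermissionError("invalid API token")
    # Placeholder rows standing in for a real query against the data source.
    return {"source": data_source, "rows": [{"region": "west", "total": 42}]}
```

In this sketch the AI Agent never holds long-lived credentials: it passes the user's JWT to `api_token_mcp`, then passes both the JWT and the returned token to `business_data_mcp`, and both calls fail once the JWT expires.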

Attack Surface

The User Token Generator

Implement this using well-established security guidelines

The JWT

Must carry an expiration (the "exp" claim), and can also include other claims

The API Tokens

Must be rotated at an appropriate frequency

Shouldn’t be shared between different business data sources

User’s AI Context

Securing this is the responsibility of the AI provider

MCP Servers

Must be protected by organizational firewalls

Should be restricted to only allow access from the organizational network
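Network restrictions are normally enforced at the firewall, but an MCP Server can also check the caller's address itself as a second layer. The following is a minimal sketch using Python's standard ipaddress module; the address ranges and the function name are hypothetical.

```python
import ipaddress

# Hypothetical organizational address ranges.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.10.0/24"),
]


def is_from_org_network(source_ip: str) -> bool:
    """Return True only if source_ip parses and falls inside an allowed range."""
    try:
        address = ipaddress.ip_address(source_ip)
    except ValueError:
        return False  # unparseable addresses are rejected outright
    return any(address in network for network in ALLOWED_NETWORKS)
```

An MCP Server could call this before doing any other work and drop the request if it returns False.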

Attack Scenarios

Bad actor gains access to a user’s JWT

The exposure is limited to the lifespan of the JWT

The bad actor must be able to access the MCP Service

Bad actor gains access to an API token

The bad actor must have a non-expired JWT for a user that is authorized to access the MCP Service

The bad actor must be able to access the MCP Service

API token should eventually expire

Ideally, the API tokens should be associated with the MCP Service in some verifiable way (source IP address, scope of privilege, etc)

AI goes rogue

Without a valid JWT, it can’t acquire API tokens

The IP address space of the AI servers can be firewalled off from the rest of the organization’s network
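One way to associate an API token with the MCP Service in a verifiable way is for the data source to keep a record of each token's bindings and check them on every use. The sketch below assumes a hypothetical ApiTokenRecord with a bound source IP, a set of scopes, and an expiry; none of these names come from a real product.

```python
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiTokenRecord:
    """Hypothetical server-side record describing how a token may be used."""
    token: str
    bound_ip: str          # only the MCP Service at this address may use the token
    scopes: frozenset      # operations the token is allowed to perform
    expires_at: float      # Unix timestamp after which the token is dead


def token_usable(record: ApiTokenRecord, presented_token: str,
                 source_ip: str, needed_scope: str, now: float) -> bool:
    """A stolen token fails here unless it is also replayed from the bound
    address, within scope, and before it expires."""
    return (
        hmac.compare_digest(presented_token.encode(), record.token.encode())
        and source_ip == record.bound_ip
        and needed_scope in record.scopes
        and now < record.expires_at
    )
```

With bindings like these, a bad actor who obtains only the token string still has to get past the network, scope, and lifetime checks.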

Implementation Suggestions

Consider using a Policy as Code solution for validating JWTs and authorization

A single centralized MCP Server which includes a variety of tools is recommended, but not required

An API token doesn’t have to be “random characters”; it could be a Base64-encoded data structure

Multiple classes of JWT could be generated: longer-lived JWTs for read-only access, and shorter-lived JWTs for read-write access

JWTs in their compact form are already Base64url-encoded, so they can be pasted into the chat interface as a single string

Make sure the MCP Service tools are well-documented with instructions to the AI about the security parameters and how they are acquired
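As an illustration of the suggestion that an API token can be a Base64-encoded data structure rather than random characters, here is a minimal sketch. The field names, the signing key, and both function names are hypothetical; an HMAC is appended so the MCP Server can detect tampering.

```python
import base64
import hashlib
import hmac
import json

TOKEN_KEY = b"demo-api-token-key"  # hypothetical secret held by the token issuer


def encode_api_token(fields: dict) -> str:
    """Serialize the fields to JSON, Base64-encode them, and append an HMAC."""
    body = base64.urlsafe_b64encode(json.dumps(fields, sort_keys=True).encode()).decode()
    mac = hmac.new(TOKEN_KEY, body.encode(), hashlib.sha256).hexdigest()
    return f"{body}.{mac}"


def decode_api_token(token: str) -> dict:
    """Verify the HMAC, then return the embedded fields."""
    body, _, mac = token.rpartition(".")
    expected = hmac.new(TOKEN_KEY, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(mac.encode(), expected.encode()):
        raise ValueError("API token failed integrity check")
    return json.loads(base64.urlsafe_b64decode(body))
```

A token built this way can carry its own data source, scope, and expiry, which the receiving MCP Server can inspect without a database lookup.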

Summary

The architecture above should give most organizations a level of security appropriate for the kind of work most AIs would be expected to perform. The key is to tie the access rights of the AI directly to the access rights of the user, in a way that is time-limited and least-privilege.

Thoughts, opinions, suggestions? Would you like help implementing this solution? Please contact us at: info@paclabs.io

Addendum

Would This Approach Have Prevented the Replit Disaster?

If you’re not familiar with the Replit disaster, click here.

Answer - Possibly. It Depends.

The primary cause of the Replit disaster was a trusted AI that went rogue and used integration tools in ways it had been told not to use them unless granted permission. For usability reasons, that permission was expressed as natural language instructions.

Expected Behavior

User: “Replit, what would happen if you refactored this code in a certain way?”

Replit: *gets confused*, *makes changes and attempts to commit, but has instructions to gain approval before it commits*

Replit: “I want to commit these files, do you give me permission?”

User: “What, no. Stop”

Actual Behavior

User: “Replit, what would happen if you refactored this code in a certain way?”

Replit: *gets confused*, *makes changes and commits*, *breaks build*, *deletes database in an attempt to fix the build*

How Would Zero Trust Help?

Levels of Privilege

First, the system could offer different JWTs: some for read-only work, some for read-write work, and perhaps even some for deletion-style work. When the user logs in, an appropriate JWT could be chosen. The MCP Servers and associated policy engines would honor the restrictions attached to the JWT. If the AI “went rogue”, it could only act within the limits of the JWT on hand, and once that JWT expired, it could do nothing further until the user supplied a new one.
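A minimal sketch of how privilege levels might be attached to JWT claims and honored on the MCP side follows. The level names, lifetimes, and operation names are all hypothetical, and signature handling is omitted so the privilege logic stays visible.

```python
# Hypothetical privilege levels; lifetimes and operation names are illustrative.
JWT_CLASSES = {
    "read-only": {"ttl_seconds": 8 * 3600, "operations": {"read"}},
    "read-write": {"ttl_seconds": 3600, "operations": {"read", "write"}},
    "destructive": {"ttl_seconds": 300, "operations": {"read", "write", "delete"}},
}


def claims_for(user: str, level: str, now: int) -> dict:
    """Build the claims a User Token Generator might embed for the chosen level."""
    return {"sub": user, "level": level, "exp": now + JWT_CLASSES[level]["ttl_seconds"]}


def is_allowed(claims: dict, operation: str, now: int) -> bool:
    """MCP-side check: the JWT must be unexpired and its level must permit the operation."""
    if now >= claims["exp"]:
        return False
    level = JWT_CLASSES.get(claims.get("level"))
    return level is not None and operation in level["operations"]
```

Under this scheme a user who only wants to ask "what would happen if" questions would pick the read-only level, and a delete request from the AI would be refused no matter what it had been told in natural language.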

Sanity Checks

Second, there could be some sort of policy engine attached to the MCP servers that would perform a sanity check in various situations such as:

`git commit` commands when the associated repository or branch is in a code freeze

`DROP TABLE` SQL commands against a production database, unless the user has set it to be in maintenance mode

and other ‘common sense’ rules that could be easily verified.
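The two example rules could be expressed as a small policy function that an MCP Server consults before executing a command. This is a rough sketch: the function name and its flags are hypothetical, and simple pattern matching stands in for what would more likely be a dedicated policy engine.

```python
import re
from typing import Tuple


def sanity_check(command: str, *, code_freeze: bool,
                 is_production: bool, maintenance_mode: bool) -> Tuple[bool, str]:
    """Return (allowed, reason) for a command the AI wants to run."""
    # Rule 1: no commits while the repository or branch is frozen.
    if code_freeze and re.match(r"\s*git\s+commit\b", command):
        return False, "repository is in a code freeze"
    # Rule 2: no DROP TABLE on production unless the user enabled maintenance mode.
    if (is_production and not maintenance_mode
            and re.search(r"\bdrop\s+table\b", command, re.IGNORECASE)):
        return False, "production database is not in maintenance mode"
    return True, "ok"
```

Because the flags are set by people rather than by the AI, the check does not depend on the AI following its instructions.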

Master Configuration

The company could have created a ‘rule configuration’ website, with a series of checkboxes and guardrails for AI activities. The MCPs could be configured to check against those rule configurations each time the AI executed any MCP Tool.
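One possible shape for this is a decorator that consults the rule configuration every time a tool is invoked. In the sketch below the RULES dictionary stands in for settings fetched from the hypothetical rule configuration website, and the tool functions are placeholders.

```python
import functools

# Stand-in for checkbox settings loaded from the rule configuration website.
RULES = {"allow_write": True, "allow_delete": False}


def guarded(required_rule: str):
    """Wrap an MCP tool so the named rule is checked on every call."""
    def decorator(tool):
        @functools.wraps(tool)
        def wrapper(*args, **kwargs):
            # Read RULES at call time so configuration changes apply immediately.
            if not RULES.get(required_rule, False):
                raise PermissionError(f"rule '{required_rule}' is not enabled")
            return tool(*args, **kwargs)
        return wrapper
    return decorator


@guarded("allow_write")
def update_record(record_id: str) -> str:
    return f"updated {record_id}"


@guarded("allow_delete")
def delete_record(record_id: str) -> str:
    return f"deleted {record_id}"
```

Unknown rules default to disabled here, so a tool that references a missing checkbox is blocked rather than silently allowed.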

And of course, all three of these approaches could be combined in various ways to create ‘defense in depth’ against maladaptive behavior.

What do you think? We are interested in your thoughts: info@paclabs.io (or comment on this article)