An Overview of the Model Context Protocol

The Standard Mechanism for Integrating Organizational Data with 3rd Party AI Systems

The Model Context Protocol (MCP) is an open standard, introduced by Anthropic, for integrating Generative AI systems (such as Anthropic’s Claude, Microsoft Copilot and OpenAI’s ChatGPT) with external data and tools. Recently, Google has pledged to support MCP as well. It appears that this protocol is going to become the default way for users to connect AI systems to external data (in this case, “external data” means anything that isn’t already embedded in the AI system’s existing knowledge base).

This article is meant as a basic overview of the concepts. Other articles that go into deeper analysis of security, enterprise deployment and other use cases are forthcoming.

How It Works

MCP defines a JSON-RPC 2.0 message format for communication between MCP Clients and MCP Servers. There are two main capabilities of interest:

Discovery - how an MCP Client connects to an MCP Server (whose existence it learns from its configuration), learns what functionality (in the parlance of MCP: “Tools”) the MCP Server offers, and learns what input each Tool requires and what type of data it returns.

Invocation - how the MCP Client assembles the necessary parameters, invokes the appropriate Tool, and then collects the data output of that invocation.
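
To make the two capabilities concrete, here is a minimal Python sketch of the JSON-RPC 2.0 request objects an MCP Client might send for discovery (`tools/list`) and invocation (`tools/call`). It only builds the messages, using the standard library rather than an MCP SDK. The `get_weather` Tool and its `city` argument are hypothetical, and a real session first completes an `initialize` exchange, which is omitted here.

```python
import itertools
import json
from typing import Optional

# JSON-RPC ids only need to be unique within a session; a counter is the simplest choice.
_next_id = itertools.count(1)


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Discovery: ask the MCP Server which Tools it offers.
list_tools = make_request("tools/list")

# Invocation: call one Tool by name. "get_weather" and "city" are hypothetical.
call_tool = make_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Boston"}},
)

print(json.dumps(list_tools))  # prints: {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(json.dumps(call_tool))
```

The server's reply to each request carries the same `id`, which is how the client matches responses to requests.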

The Big Picture

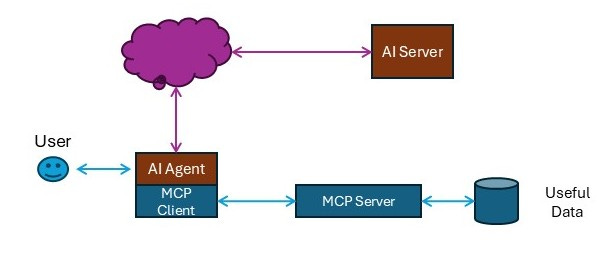

Of course, the interaction between the MCP Client and Server is a relatively small part of the overall system that lets a user work with an AI System. The following diagram shows a more concrete example:

In this approach, a User interacts with an AI Agent (the MCP specification calls this the “host”): a chat or coding interface that either runs locally (such as Claude Desktop or VS Code) or is web-based (such as ChatGPT), and that relays communication between the User and the AI System.

Note: this is just a common reference example. The AI System can also be hosted locally, or inside an organization.

Setting up an MCP Client/Server Relationship

Baseline: For various security reasons, it is typical (and probably best) for the AI Agent to operate locally.

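1. The AI Agent is configured (typically via a config file) with the details of the MCP Server: usually the command that launches a local server, or the URL of a remote one.
2. The AI Agent is restarted, reads the updated config file, and uses it to launch or connect to the MCP Server.
3. Assuming the configuration is valid, the MCP Client performs an initialization handshake with the MCP Server (exchanging protocol versions and capabilities), then asks it for the list of Tools (a `tools/list` request).
4. The MCP Server responds with its Tools. Each Tool has a name, a natural-language description of what it does and when to use it, and a JSON Schema describing its input parameters.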
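
As an illustration of the configuration step, the sketch below builds the kind of `mcpServers` entry that Claude Desktop reads from its `claude_desktop_config.json` file, here pointing at the reference filesystem server published by the MCP project. Other AI Agents use similar, but not identical, formats. The label `filesystem` and the directory path are placeholders.

```python
import json

# Claude Desktop-style "mcpServers" configuration. "filesystem" is an arbitrary
# label, and the directory path is a placeholder to replace with a real one.
config = {
    "mcpServers": {
        "filesystem": {
            # For a STDIO server, the AI Agent starts this command as a subprocess.
            "command": "npx",
            "args": [
                "-y",
                "@modelcontextprotocol/server-filesystem",
                "/Users/alice/Documents",  # placeholder: directory the server may access
            ],
        }
    }
}

# Write this text to the AI Agent's config file, then restart the Agent.
config_text = json.dumps(config, indent=2)
print(config_text)
```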

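One important observation that is counterintuitive to most software developers: although each Tool’s parameters are described by a JSON Schema, it is the natural-language descriptions that the AI System relies on to decide which Tool to call and what values to pass. Nothing in the protocol maps a User’s request to a Tool; the model works that out from descriptive text.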
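
To show where the formal and the natural-language parts sit, here is a sketch of a Tool definition as it might appear in a `tools/list` response, together with a deliberately tiny argument check covering only `required`, `type` and `enum`. The `get_weather` Tool and the `check_arguments` helper are hypothetical; a real client or server would use a complete JSON Schema validator.

```python
from typing import List

# A Tool as it might appear in a tools/list response. "name", "description" and
# "inputSchema" are fields defined by the MCP specification; the Tool is hypothetical.
weather_tool = {
    "name": "get_weather",
    # The natural-language part: the AI System reads this to decide when to use the Tool.
    "description": "Get the current weather for a city. Use when the user asks about weather.",
    # The formal part: a JSON Schema describing the arguments.
    "inputSchema": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name, e.g. 'Boston'"},
            "units": {"type": "string", "enum": ["metric", "imperial"]},
        },
        "required": ["city"],
    },
}

# JSON Schema primitive types mapped to Python types (a subset, for illustration).
_JSON_TYPES = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_arguments(tool: dict, arguments: dict) -> List[str]:
    """Return a list of problems; an empty list means the arguments pass.

    Only 'required', 'type' and 'enum' are checked. Unknown arguments are
    ignored, matching JSON Schema's default of allowing extra properties.
    """
    schema = tool["inputSchema"]
    problems = [
        f"missing required argument '{name}'"
        for name in schema.get("required", [])
        if name not in arguments
    ]
    for name, value in arguments.items():
        prop = schema.get("properties", {}).get(name)
        if prop is None:
            continue
        expected = _JSON_TYPES.get(prop.get("type"))
        if expected is not None and not isinstance(value, expected):
            problems.append(f"argument '{name}' should be of type {prop['type']}")
        if "enum" in prop and value not in prop["enum"]:
            problems.append(f"argument '{name}' must be one of {prop['enum']}")
    return problems
```

The schema constrains the shape of the arguments, but choosing `get_weather` at all, and deciding that "Boston" is the city, is still left to the model reading the description.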

Once the MCP Client has established the availability and capabilities of the MCP Server and its Tools, the AI Agent (typically) asks the User to approve the use of the Tools.

Assuming the User approves the use of the Tools, the User can now use the natural language chat interface to instruct the AI Agent to invoke a Tool.
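
MCP does not prescribe how an AI Agent records the User's approval. As a hypothetical sketch, an Agent might keep the set of approved Tool names and refuse to forward any call that is not in it; the `ToolApprovals` class below is an illustration, not part of any SDK.

```python
from typing import Set


class ToolApprovals:
    """Hypothetical record of which Tools the User has approved."""

    def __init__(self) -> None:
        self._approved: Set[str] = set()

    def approve(self, tool_name: str) -> None:
        self._approved.add(tool_name)

    def revoke(self, tool_name: str) -> None:
        self._approved.discard(tool_name)

    def require(self, tool_name: str) -> None:
        """Raise PermissionError unless the User has approved this Tool."""
        if tool_name not in self._approved:
            raise PermissionError(f"User has not approved tool '{tool_name}'")


approvals = ToolApprovals()
approvals.approve("get_weather")
approvals.require("get_weather")  # passes silently; an unapproved name would raise
```

The Agent would call `require` just before handing a Tool invocation to the MCP Client, so a revoked Tool stops working immediately.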

Normal Use

1. The User types a natural-language request that requires a Tool.
2. The AI Agent passes this text, along with the descriptions of the available Tools, to the AI System, which processes it and recognizes that a Tool is needed.
3. The AI System instructs the AI Agent to invoke the appropriate Tool, supplying the arguments needed for the invocation.
4. The AI Agent calls the MCP Client to invoke the Tool.
5. The MCP Client sends a `tools/call` request, containing the Tool name and arguments, to the MCP Server.
6. The MCP Server invokes the Tool, which performs some coded action (such as fetching data from a URL, executing a database command, or reading files on the local file system).
7. The Tool completes its work and returns any resulting data to the MCP Server.
8. The MCP Server returns this data to the MCP Client.
9. The MCP Client returns this data to the AI Agent.
10. The AI Agent returns this data to the AI System.
11. The AI System analyzes this data in the context of the User’s original request (step 1 above).
12. The AI System generates a response and sends it back to the AI Agent.
13. The AI Agent presents the response to the User.
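
The round trip described above can be simulated end to end in a few lines. In this toy sketch the "AI System" is a keyword-matching stand-in rather than a real model, the client and server run in the same process with JSON serialization standing in for the transport, and the `get_weather` Tool is hypothetical.

```python
import json
from typing import Callable, Dict, Optional


# --- MCP Server side ---------------------------------------------------------
def get_weather(city: str) -> str:
    """Hypothetical Tool; a real one might call a weather API."""
    return f"Forecast for {city}: sunny, 18 C"


SERVER_TOOLS: Dict[str, Callable[..., str]] = {"get_weather": get_weather}


def mcp_server_handle(request: dict) -> dict:
    """Run the named Tool and wrap its output as a tools/call result."""
    params = request["params"]
    text = SERVER_TOOLS[params["name"]](**params["arguments"])
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }


# --- MCP Client side ---------------------------------------------------------
def mcp_client_call(name: str, arguments: dict) -> str:
    """Send a tools/call request and return the text of the first content item."""
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
               "params": {"name": name, "arguments": arguments}}
    # Serializing to JSON and back stands in for the STDIO or HTTP transport.
    wire_request = json.loads(json.dumps(request))
    response = json.loads(json.dumps(mcp_server_handle(wire_request)))
    return response["result"]["content"][0]["text"]


# --- AI System (a keyword-matching stand-in, NOT a real model) ----------------
def fake_ai_system(user_text: str, tool_result: Optional[str] = None) -> dict:
    if tool_result is not None:
        # Analyze the Tool output in the context of the original request.
        return {"answer": f"Here is what I found: {tool_result}"}
    if "weather" in user_text.lower():
        # Decide a Tool is needed and supply its arguments.
        city = user_text.rstrip("?").split()[-1]
        return {"tool": "get_weather", "arguments": {"city": city}}
    return {"answer": "No Tool was needed for that request."}


# --- AI Agent orchestrating the round trip ------------------------------------
def ai_agent(user_text: str) -> str:
    decision = fake_ai_system(user_text)
    if "tool" in decision:
        result = mcp_client_call(decision["tool"], decision["arguments"])
        decision = fake_ai_system(user_text, tool_result=result)
    return decision["answer"]


print(ai_agent("What is the weather in Boston?"))
# prints: Here is what I found: Forecast for Boston: sunny, 18 C
```

Note that the AI System is consulted twice: once to decide on the Tool call, and again to turn the Tool output into an answer.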

Caveats

The User does not need to be human; it could be a software entity of some kind

The response data could be just about anything, although binary data is awkward: Tool results are returned as JSON content items (for example text, or images encoded as base64), and the AI System may not be able to make much use of arbitrary binary content
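
For example, a Tool that produces a chart could return it alongside a text summary. The sketch below builds a `tools/call` result with a text item and an image item, following the content shapes in the MCP specification (`type`, `data`, `mimeType`). The function name is hypothetical, and the eight-byte PNG signature stands in for real image data.

```python
import base64


def make_chart_result(summary: str, png_bytes: bytes) -> dict:
    """Build a tools/call result with a text item and a base64-encoded PNG item."""
    return {
        "content": [
            {"type": "text", "text": summary},
            {
                "type": "image",
                # Binary data cannot go into JSON directly, so it travels as base64 text.
                "data": base64.b64encode(png_bytes).decode("ascii"),
                "mimeType": "image/png",
            },
        ],
        "isError": False,
    }


# The 8-byte PNG file signature stands in for a real rendered chart.
fake_png = b"\x89PNG\r\n\x1a\n"
result = make_chart_result("Q1 sales by region (chart attached)", fake_png)
print(result["content"][1]["data"])  # prints: iVBORw0KGgo=
```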

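The MCP specification defines two standard transports for communication between the MCP Client and Server:

STDIO (the AI Agent launches the MCP Server as a local subprocess and exchanges messages over standard input and output)

Streamable HTTP (which replaced the earlier “HTTP with Server-Sent Events” transport in the 2025-03-26 revision of the specification; Server-Sent Events can still be used within it to stream messages)

Custom transports, such as WebSockets, are permitted but not standardized. Typically, STDIO is used for local tools and proofs of concept, while HTTP is used for remote, shared, “enterprise-grade” deployments.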
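
With the STDIO transport, each JSON-RPC message is written as a single line of JSON, and messages are separated by newlines. This sketch shows that framing using an in-memory buffer in place of a real subprocess pipe; `write_message` and `read_messages` are illustrative helpers, not SDK functions.

```python
import io
import json
from typing import Iterator, TextIO


def write_message(stream: TextIO, message: dict) -> None:
    """Write one JSON-RPC message as a single newline-terminated line."""
    # Without indent, json.dumps escapes any newlines inside strings as "\n",
    # so the serialized message never contains a raw newline character.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[dict]:
    """Yield JSON-RPC messages from a newline-delimited stream."""
    for line in stream:
        line = line.strip()
        if line:  # tolerate stray blank lines
            yield json.loads(line)


# An in-memory buffer stands in for the pipe to the MCP Server's stdin.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                     "params": {"name": "get_weather", "arguments": {"city": "Boston"}}})
pipe.seek(0)
received = list(read_messages(pipe))
```

In a real AI Agent, the streams would be the `stdin` and `stdout` of the server process, for example one started with `subprocess.Popen(cmd, stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True)`.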

Technical Challenges

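Early versions of the Model Context Protocol had no authentication or authorization built in. Revisions from 2025-03-26 onward define an optional, OAuth 2.1-based authorization mechanism for HTTP transports, but enforcing these requirements is still largely the responsibility of the MCP Server or the downstream systems, and STDIO servers typically rely on credentials supplied through their environment.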

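MCP itself does not provide encryption; it relies on the transport. Connections between the MCP Client and Server over HTTP should use HTTPS (TLS), while STDIO traffic never leaves the local machine. The AI Agent’s connection to a hosted AI System is likewise typically encrypted with HTTPS.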

The Tool descriptions that tell the AI System when and how to use each Tool (including what the parameters mean and what the response data contains) are written in natural language, and are therefore open to misunderstanding. Clarity is important here.

The AI System can only process a limited amount of data at a time (its context window), so large data sets returned by Tools can be truncated or cause unexpected results
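
One common way to stay within the AI System's limits is for a Tool to return large data sets in pages. The sketch below assumes a hypothetical Tool that holds a list of rows; the `cursor` and `next_cursor` fields, the helper names, and the 2,000-character budget are this example's own conventions, not part of the MCP specification.

```python
import json
from typing import List, Optional

# Hypothetical character budget; in practice tune it to the AI System's context window.
MAX_RESULT_CHARS = 2_000


def page_rows(rows: List[dict], cursor: int = 0, page_size: int = 50,
              max_chars: int = MAX_RESULT_CHARS) -> dict:
    """Return one page of rows plus a cursor for the next page (None when done).

    The page is halved until its JSON fits in max_chars; a single row larger
    than the budget is still returned so the caller always makes progress.
    """
    page = rows[cursor:cursor + page_size]
    while len(page) > 1 and len(json.dumps(page)) > max_chars:
        page = page[: len(page) // 2]
    end = cursor + len(page)
    next_cursor: Optional[int] = end if end < len(rows) else None
    return {"rows": page, "next_cursor": next_cursor}


def tool_result_for_page(rows: List[dict], cursor: int = 0) -> dict:
    """Wrap a page as a tools/call result with a single text content item."""
    return {"content": [{"type": "text", "text": json.dumps(page_rows(rows, cursor))}],
            "isError": False}
```

The AI System can then call the Tool again with the returned `next_cursor` if it needs more data, instead of receiving everything at once.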

Benefits of the Model Context Protocol

The benefits are considerable:

The Client/Server configuration is relatively easy to set up

It is reasonably straightforward to secure the MCP Server and the data (via expiring tokens of various sorts)

This mechanism will dramatically increase the usefulness of AI System processing of private data
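
As one example of the expiring tokens mentioned above, an MCP Server could require an HMAC-signed token that carries its own expiry time. This is a generic technique sketched with Python's standard library, not the OAuth-based authorization that recent MCP revisions define for HTTP transports; the secret, the token layout, and the function names are all hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical shared secret; in practice load it from a secrets manager.
SECRET = b"replace-with-a-real-secret"


def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(subject: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """Create a token of the form 'subject.expiry.signature'."""
    issued_at = time.time() if now is None else now
    payload = f"{subject}.{int(issued_at + ttl_seconds)}"
    return f"{payload}.{_sign(payload)}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the subject if the token is authentic and unexpired, else None."""
    try:
        subject, expires, signature = token.rsplit(".", 2)
        expires_at = int(expires)
    except ValueError:
        return None  # malformed token
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(signature, _sign(f"{subject}.{expires}")):
        return None
    current = time.time() if now is None else now
    if current >= expires_at:
        return None  # expired
    return subject
```

The server would call `verify_token` on every request and reject any call for which it returns `None`; short lifetimes limit the damage if a token leaks.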

Thanks

If you have questions or feedback, please contact us at: info@paclabs.io