Interactions between Policy as Code and Artificial Intelligence

Three things to consider when looking at your security architecture

The security model around AI Agents and their interactions with humans can be tricky. In our work with customers, we consistently see three concerns that deserve further investigation:

Generating Policy logic from natural language

Implementing AI Governance Policy

How policy interacts with the Model Context Protocol

Note: If you’d like to learn more about the Model Context Protocol (MCP), the official MCP specification and documentation are a good place to start.

Target Audience

The target audience for this essay consists of two related groups:

People who wish to use agents to generate their policy logic

People who want to build policy-based Access Control infrastructure to “constrain” agents under their responsibility

If either of these concepts seems interesting to you, please read on.

Generating Policy Logic from Natural Language

One of the visions of AI is the elimination of ‘routine’ coding tasks, replacing them with agent-generated logic. The failure cases of this approach are well documented, but even so, it is clear that in many languages agents can create viable prototype implementations. The key to success here is the volume of existing code: agents can mine all of the public code on GitHub as a source of examples to extrapolate from.

For Policy as Code, this is likely to be more difficult, for two reasons:

Access Control belongs to the security domain, and security code is some of the most ‘mission critical’ code in an enterprise. Agents, meanwhile, remain notorious for making subtle mistakes during code generation.

The two primary Policy as Code languages, XACML and Rego, have minuscule representation in public codebases compared with general-purpose languages, which makes it difficult for agents to use existing code as a guide.

Over time, this second limitation should fade as Policy-Based Access Control (PBAC) becomes more established in the enterprise. In the meantime, Retrieval-Augmented Generation (RAG) can help by adding libraries of policy logic to the AI Agent’s knowledge base. The first issue can’t easily be avoided, but you can still take advantage of agents if you lay the proper groundwork ahead of time:

Have one or more security-minded humans design the API for the PBAC logic.

Identify the namespaces, the capabilities, and the templates of the payloads that will be exchanged.

Implement a comprehensive set of unit tests against this API to verify security compliance.

One tool that can help here is the open-source Raygun, which abstracts away some of the tedium involved in generating policy tests.

Provide the API documentation and the unit tests to the agent; it should then do a reasonable job of implementing policy logic that satisfies the requirements.

Note: While you do not have to have unit tests for every possible edge case, the more you have, the better.

Once you have the generated policy logic, use the testing infrastructure to verify its correctness, and either have the agent regenerate it or fix it by hand.
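To make this test-first workflow concrete, here is a minimal Python sketch of table-driven contract tests run against a candidate policy. The payload fields, the test cases, and `candidate_policy` are hypothetical stand-ins: in practice the cases would exercise your real policy engine (for example, through Raygun or the engine’s own test tooling), and the candidate would be the agent-generated logic.

```python
"""Minimal sketch: human-authored contract tests for a policy decision API.

Assumptions (hypothetical): decisions are booleans, inputs follow a small
payload template, and `candidate_policy` stands in for agent-generated logic.
"""
from dataclasses import dataclass
from typing import Callable, Dict, List

Payload = Dict[str, object]


@dataclass(frozen=True)
class PolicyCase:
    name: str
    payload: Payload
    expected_allow: bool


# The contract: security-minded humans encode the requirements as cases.
CONTRACT: List[PolicyCase] = [
    PolicyCase("owner can read", {"action": "read", "user": "alice", "owner": "alice"}, True),
    PolicyCase("non-owner cannot read", {"action": "read", "user": "bob", "owner": "alice"}, False),
    PolicyCase("nobody can delete", {"action": "delete", "user": "alice", "owner": "alice"}, False),
    PolicyCase("missing fields are denied", {"action": "read"}, False),
]


def candidate_policy(payload: Payload) -> bool:
    """Stand-in for agent-generated policy logic (hypothetical)."""
    return (
        payload.get("action") == "read"
        and payload.get("user") is not None
        and payload.get("user") == payload.get("owner")
    )


def run_contract(policy: Callable[[Payload], bool], cases: List[PolicyCase]) -> List[str]:
    """Return the names of the cases where the policy disagrees with the contract."""
    return [c.name for c in cases if policy(c.payload) != c.expected_allow]


if __name__ == "__main__":
    failures = run_contract(candidate_policy, CONTRACT)
    print("all cases pass" if not failures else f"failing: {failures}")
```

A naive candidate that only compares `user` with `owner` would pass the first three cases but fail "missing fields are denied", because two missing values compare as equal. That is exactly the kind of subtle mistake the human-authored contract is meant to catch before a generated policy is deployed.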

Implementing AI Governance Policy

Another key concern with enterprise-grade AI is preventing agents from “going rogue”, for example:

Accessing forbidden websites, APIs, or services

Accessing services that are outside the appropriate scope

Accessing services with deceptive credentials (misrepresenting the source of the request)

Fortunately, this is an excellent use case for Policy as Code. One approach:

Establish a security gateway proxy through which all agent-originated requests must pass.

There should only be two ways that the agent can interact with the external network:

The customer interaction API (such as the chat interface)

The security proxy

The Security Proxy should have a defined API for requests and responses. Each request should include at least:

A token that identifies the agent

A token that identifies the customer

Preferably a short-lived token, such as a JWT

The destination URL

Any additional request data

Instruct the agent in the use of the Security Proxy’s API

Define a set of Access Control policies that specify:

The universe of allowed URL destinations

The logic that defines which destinations are allowed for various customers

This approach provides a solid foundation for governance. The customer-interface agent can generate the customer-specific token, the set of approved external destinations is easy to manage, and scoping capabilities for customers with different access privileges works the same way as in any other PBAC implementation.
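The proxy contract and policies described above can be sketched as follows. This is a minimal Python illustration, not a production proxy: it assumes both tokens have already been validated and their claims extracted, and the host names, customer tiers, and agent IDs are hypothetical example data. The universe of allowed destinations and the per-customer scoping are kept as separate data, mirroring the two kinds of policy listed above.

```python
"""Minimal sketch of a security-proxy request envelope and policy decision.

Assumptions (hypothetical): token claims were verified upstream; the
allowlists, tiers, and agent IDs below are example policy data.
"""
import time
from dataclasses import dataclass, field
from typing import Dict, Optional, Set
from urllib.parse import urlsplit


@dataclass(frozen=True)
class ProxyRequest:
    agent_id: str              # from the token that identifies the agent
    customer_id: str           # from the short-lived customer token
    customer_token_exp: float  # customer token expiry, in epoch seconds
    destination_url: str
    body: Dict[str, object] = field(default_factory=dict)


# Policy data: the universe of allowed destination hosts.
ALLOWED_HOSTS: Set[str] = {"api.weather.example", "crm.internal.example", "billing.internal.example"}

# Policy data: which destinations each customer tier may reach.
TIER_HOSTS: Dict[str, Set[str]] = {
    "basic": {"api.weather.example"},
    "premium": {"api.weather.example", "crm.internal.example"},
}
CUSTOMER_TIER: Dict[str, str] = {"cust-1": "basic", "cust-2": "premium"}
KNOWN_AGENTS: Set[str] = {"support-agent"}


def decide(req: ProxyRequest, now: Optional[float] = None) -> bool:
    """Default-deny: the request is allowed only if every check passes."""
    now = time.time() if now is None else now
    if req.agent_id not in KNOWN_AGENTS:
        return False
    if req.customer_token_exp <= now:  # expired customer token
        return False
    parts = urlsplit(req.destination_url)
    if parts.scheme != "https" or parts.hostname not in ALLOWED_HOSTS:
        return False
    tier = CUSTOMER_TIER.get(req.customer_id)
    return tier is not None and parts.hostname in TIER_HOSTS.get(tier, set())
```

Each check can only reject, so a request is allowed only when it passes all of them. Note that `billing.internal.example` is in the allowed universe but assigned to no tier, so no customer can reach it until a policy change grants access.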

Example Implementations

Several security patterns work well regardless of how you choose to implement policy restrictions on your agents. The following simple diagrams help visualize each approach.

Integrating Policy with MCP Solutions

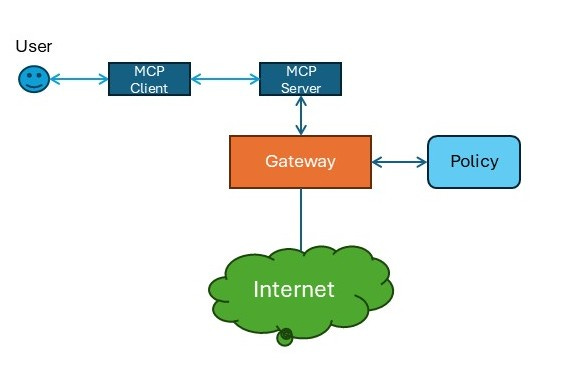

Standalone Gateway

In this approach, the MCP server that handles external traffic must route all Internet traffic through a proxy, and this gateway uses a policy system to verify each request. This is a straightforward, general-purpose solution, but it can be difficult to ensure that the gateway and policy system have all the data they need to make fully informed decisions.

Note: This approach assumes that the MCP server is in a managed network configuration, and that the gateway can interact with some sort of policy system to make decisions.

Note: This approach is also constrained to the protocols supported by the gateway (e.g., a web gateway can only be used to constrain web traffic, not SQL, gRPC, etc.).
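As a rough illustration of the standalone gateway pattern, the Python sketch below shows the gateway side of a policy query. It assumes an OPA instance listening on its default port (8181) and a hypothetical policy that publishes a boolean at `data.agent.gateway.allow`; OPA’s REST Data API accepts `POST /v1/data/<path>` with an `{"input": ...}` body and returns the decision under `result`. The `X-Agent-Id` header is likewise an assumption. The transport is injectable so the logic can be exercised without a running OPA.

```python
"""Minimal sketch: a gateway asking an external policy engine for a decision.

Assumes OPA at http://localhost:8181 and a hypothetical policy exposing a
boolean at data.agent.gateway.allow.
"""
import json
import urllib.request
from typing import Callable, Dict

OPA_URL = "http://localhost:8181/v1/data/agent/gateway/allow"  # hypothetical policy path

Transport = Callable[[str, bytes], bytes]


def http_transport(url: str, body: bytes) -> bytes:
    """POST a JSON body to the policy engine and return the raw response bytes."""
    req = urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=2) as resp:
        return resp.read()


def build_input(method: str, url: str, headers: Dict[str, str]) -> Dict[str, object]:
    """Describe the intercepted request: this is all the data the policy will see."""
    return {"method": method, "url": url, "agent": headers.get("X-Agent-Id")}


def is_allowed(input_doc: Dict[str, object], transport: Transport = http_transport) -> bool:
    """Fail closed: any error, a missing result, or a non-true result means deny."""
    try:
        raw = transport(OPA_URL, json.dumps({"input": input_doc}).encode())
        return json.loads(raw).get("result") is True
    except Exception:
        return False
```

`build_input` also makes the limitation noted above visible: the policy can only reason about the fields the gateway extracts from the traffic it understands. Because the gateway fails closed, an unreachable policy engine or an undefined decision results in a denial.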

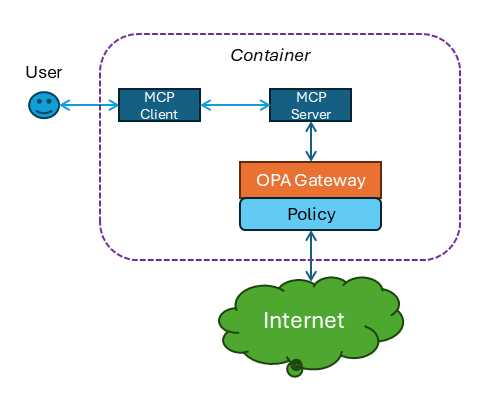

Containerized Solutions

In some cases, agents and their associated MCP support systems are deployed as containers, and a centralized network gateway could become a significant scaling problem. In this scenario, we recommend embedding the open-source Open Policy Agent (OPA) in the container. Ensure that the MCP server can only interact with the client and the gateway; the gateway enforces policy.

As with the previous diagram, this approach is limited to the types of traffic the gateway can enforce, and by the data supplied by the MCP server.

Note: This diagram uses OPA as the gateway, but OPA is not required. Any gateway that can interact with a policy system to enforce access control is viable, as is any policy agent.

Note: While the policy can be hard-coded into the container, tools such as OPA also make it possible to centralize the policy and have the policy agents inside the containers fetch it as required.
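For the centralized-policy variant, OPA supports this natively through its bundle feature, which periodically downloads policy and data from a remote service. The Python sketch below only illustrates the underlying idea under hypothetical assumptions: `fetch` stands in for whatever retrieves policy data from the central store, and the cache keeps serving the last known good copy if that store becomes unreachable.

```python
"""Minimal sketch: an in-container cache for centrally managed policy data.

The fetch function and data shape are hypothetical; OPA's bundle feature
provides this natively.
"""
import time
from typing import Callable, Dict, Optional

PolicyData = Dict[str, object]


class PolicyCache:
    def __init__(
        self,
        fetch: Callable[[], PolicyData],
        max_age_s: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ):
        self._fetch = fetch
        self._max_age_s = max_age_s
        self._clock = clock
        self._data: Optional[PolicyData] = None
        self._fetched_at = float("-inf")  # forces a fetch on first use

    def get(self) -> Optional[PolicyData]:
        """Return policy data, refreshing it when older than max_age_s."""
        if self._clock() - self._fetched_at >= self._max_age_s:
            try:
                self._data = self._fetch()
                self._fetched_at = self._clock()
            except Exception:
                pass  # keep the last known good data (None if never fetched)
        return self._data
```

If no policy has ever been fetched, `get` returns `None`, and the in-container enforcement point should treat that as a denial.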

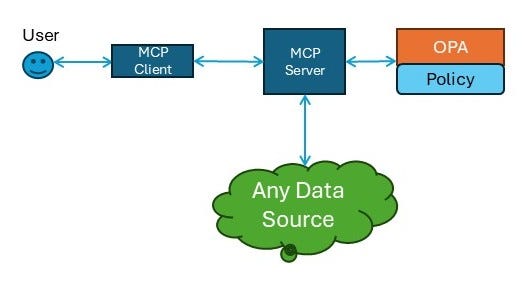

Policy-Integrated MCP

This is an excellent way to generalize agent security. In this approach, the MCP server interacts directly with a policy agent (such as OPA), whose decisions the MCP server can then enforce itself. At the time of writing, we are not aware of any MCP servers that provide this functionality (yet), but the benefits of this approach are clear.
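Since we are not aware of an MCP server that offers this today, the following is a purely hypothetical Python sketch of what policy integration inside the server could look like. It does not use any real MCP SDK: the dispatcher, tool names, and `example_policy` are invented for illustration, and in practice the policy callback would query a policy agent such as OPA. The key point is that the server knows the tool name, its arguments, and the caller context, so the policy receives much richer input than a network gateway would.

```python
"""Minimal sketch of policy enforcement inside an MCP-style tool dispatcher.

Hypothetical throughout: no real MCP SDK is used; the registry, tools, and
policy callback are illustrative only.
"""
from typing import Any, Callable, Dict

ToolHandler = Callable[..., Any]
PolicyFn = Callable[[Dict[str, Any]], bool]


class PolicyDenied(Exception):
    pass


class PolicyEnforcingDispatcher:
    def __init__(self, policy: PolicyFn):
        self._policy = policy
        self._tools: Dict[str, ToolHandler] = {}

    def register(self, name: str, handler: ToolHandler) -> None:
        self._tools[name] = handler

    def call(self, caller: Dict[str, str], name: str, arguments: Dict[str, Any]) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        # The policy sees the tool, its arguments, and the caller context.
        decision_input = {"caller": caller, "tool": name, "arguments": arguments}
        if not self._policy(decision_input):
            raise PolicyDenied(f"policy denied {name} for {caller.get('customer')}")
        # Enforcement happens here, before the tool handler ever runs.
        return self._tools[name](**arguments)


def example_policy(inp: Dict[str, Any]) -> bool:
    """Hypothetical rule: only premium callers may look up orders; anyone may get the time."""
    if inp["tool"] == "lookup_order":
        return inp["caller"].get("tier") == "premium"
    return inp["tool"] == "get_time"
```

Because the check runs before the handler, a denied call never reaches the downstream service, whatever protocol that tool would have used to reach it.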

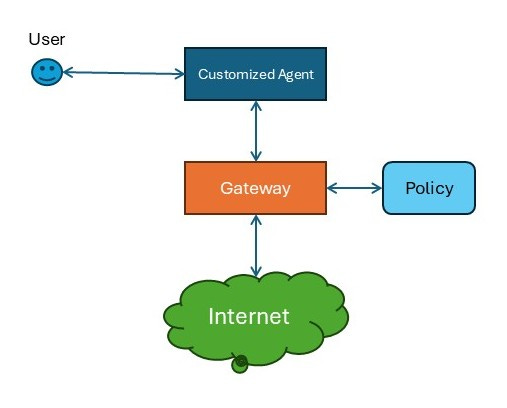

Integrating Policy with a Custom Agent

If your AI Agents are deployed without the benefit of MCP, how can you take advantage of Policy as Code?

In this configuration, you must use firewall rules to constrain your AI Agent so that its only route to external networks is through the gateway. Then, as in the previous examples, the gateway can use policy to make decisions about the Agent’s requests.

Again, this approach is limited to whatever data protocols are supported by the gateway, and the gateway must have some mechanism to interact with policy engines.

If the agent is supported by custom integrations for data access, those custom integrations can be modified to become the mechanisms for enforcement.

Other Configurations

These are just some of the more common configurations we have discussed with customers and peers. Other configurations are viable, perhaps with some customized software support.

Bonus: Build Your Own Security Gateway with OPA and Rego

The Open Policy Agent (OPA) can be used to build exactly the kind of security gateway described above:

It can validate tokens and parse JWTs

It processes a mixture of policy logic and dynamic policy data, allowing for flexible capabilities over time

The policy data and rules can be centralized and fetched dynamically by the policy agents in the field

It can call external URLs (via its http.send built-in function), sending request data and processing response data

It is open source

It scales extremely well

A detailed walkthrough of this approach is beyond the scope of this article.

Thanks

We hope this has given you a useful perspective on the intersection between Policy and AI Agents. If you’d like to discuss further, feel free to contact us at: johnbr@paclabs.io